
Feb 6, 2026

The Ultimate AI Transformation Playbook: A Practical Roadmap for 2026 and Beyond

Learn how leading organizations are turning AI from stalled pilots into measurable business results.

AI transformation has reached a point where the data is clear enough to draw useful conclusions. The technology works. The adoption curves are steep. And the failure rates are still remarkably high. MIT's 2025 research found that 95% of generative AI pilots at enterprises haven't shown measurable financial returns within six months. Separately, Bain's analysis puts the broader business transformation failure rate at 88%.

These numbers exist alongside equally real success stories. Google Cloud's 2025 ROI of AI report found that among executives whose organizations have deployed AI agents in production, 74% reported achieving ROI within the first year. And 39% of those reporting productivity gains said productivity at least doubled.

The gap between these two realities contains the entire strategic challenge. This playbook is built to help you navigate that gap. It covers what AI transformation actually requires, the specific points where organizations tend to stall, and the operational steps that separate measurable outcomes from expensive experiments.

What Is AI Transformation?

AI transformation refers to the structural integration of artificial intelligence into how an organization operates, makes decisions, and delivers value. It's distinct from AI adoption, which typically describes individual teams or employees using AI tools within existing workflows.

The distinction matters because the outcomes are different. Deloitte's 2026 enterprise AI report quantifies this clearly. About one-third (34%) of surveyed organizations are using AI to deeply transform, by creating new products and services or reinventing core processes. Another 30% are redesigning key processes around AI. The remaining 37% are using AI at a surface level, with little change to existing processes. All three groups are capturing some productivity gains, but only the first group is building structural competitive advantage.

For a detailed breakdown of the concept and a step-by-step framework, read our full guide on AI transformation definition and roadmap.

Why Most AI Transformation Projects Fail

The failure rates in AI are high enough to be systemic. Understanding why they fail is more useful than understanding why the few succeed, because the failure patterns repeat with almost structural consistency.

The Data Readiness Problem

The single most common blocker is data. Gartner's research on AI-ready data predicts that through 2026, organizations will abandon 60% of AI projects not supported by AI-ready data. Their survey found that 63% of organizations either don't have or aren't sure if they have the right data management practices for AI.

This plays out in a specific way. A company selects an AI tool, connects it to existing data sources, and gets inconsistent or unreliable outputs. The usual conclusion is that the tool doesn't work. The actual issue is that the data feeding it is fragmented, poorly labeled, or inaccessible across systems. According to research compiled by Integrate.io, organizations with poor data quality see 60% higher project failure rates than those with strong quality programs. And the average enterprise runs 897 applications, but only 29% of them are integrated, creating massive data silos that AI systems can't bridge on their own.

For a closer look at cost factors that derail AI initiatives, including data infrastructure, see our breakdown of the hidden costs that cause AI projects to fail.

The Strategy Problem

The second systemic failure point is strategic direction. PwC's 2026 AI predictions describe the pattern clearly: many companies take a bottom-up approach, crowdsourcing AI initiatives across teams and then attempting to shape them into a coherent strategy after the fact. The result is projects that may not match enterprise priorities, are rarely executed with precision, and almost never lead to transformation.

The organizations that are seeing results have moved to a top-down model. Leadership identifies specific workflows or business processes where AI's payoff can be significant, then applies the right resources (talent, technical infrastructure, and change management) through a centralized structure. PwC calls this an "AI studio": a hub that brings together reusable components, frameworks for evaluating use cases, sandbox environments for testing, and deployment protocols.

This doesn't require micromanaging every AI initiative. It requires that strategic direction (where AI gets deployed, what problems it addresses, and how success gets measured) is owned at the leadership level.

How to Build a Solid AI Transformation Strategy

The organizations with the best outcomes share a common starting point: they begin with business problems, not AI capabilities. This sounds simple, but MIT's research shows it's the exception. Of the organizations studied, 60% evaluated enterprise-grade AI tools, but only 20% reached the pilot stage and just 5% reached production. The core barrier to scaling, according to MIT, is not infrastructure, regulation, or talent. It's learning: most AI systems don't retain feedback, adapt to context, or improve over time.

That finding has a practical implication: the strategy can't be about deploying technology. It has to be about building the conditions under which AI can learn, improve, and deliver compounding value.

Identifying High-Impact Workflows

The most effective way to prioritize AI use cases is to map organizational friction first. Where are decisions bottlenecked by slow data access? Where are skilled people spending time on repetitive tasks that follow clear rules? Where do errors happen because of manual handoffs between systems?

Those friction points are where AI tends to deliver the most immediate, measurable impact. Research from Google Cloud's ROI of AI report supports this: the executives reporting the highest ROI from AI agents are those who deployed them against specific, well-defined workflows rather than broad automation mandates. Successful deployments focus on specific domains where the problem is understood, the data exists, and the outcome is measurable.

Setting Up Governance Early

One finding from PwC's 2025 Responsible AI survey stands out: 60% of executives said responsible AI practices boost ROI and efficiency, and 55% reported improved customer experience and innovation. But nearly half also said that turning those principles into operational processes has been a challenge.

The practical lesson is that governance needs to be built into the AI strategy from the beginning, not added after deployment. At minimum, this means defining who owns each AI initiative, what data it's allowed to access, and who is accountable for outcomes. The World Economic Forum's analysis on AI governance frames governance as a growth enabler: organizations that embed it early avoid the fragmentation and compliance risk that slows everything down later.

Building the Roadmap Step by Step

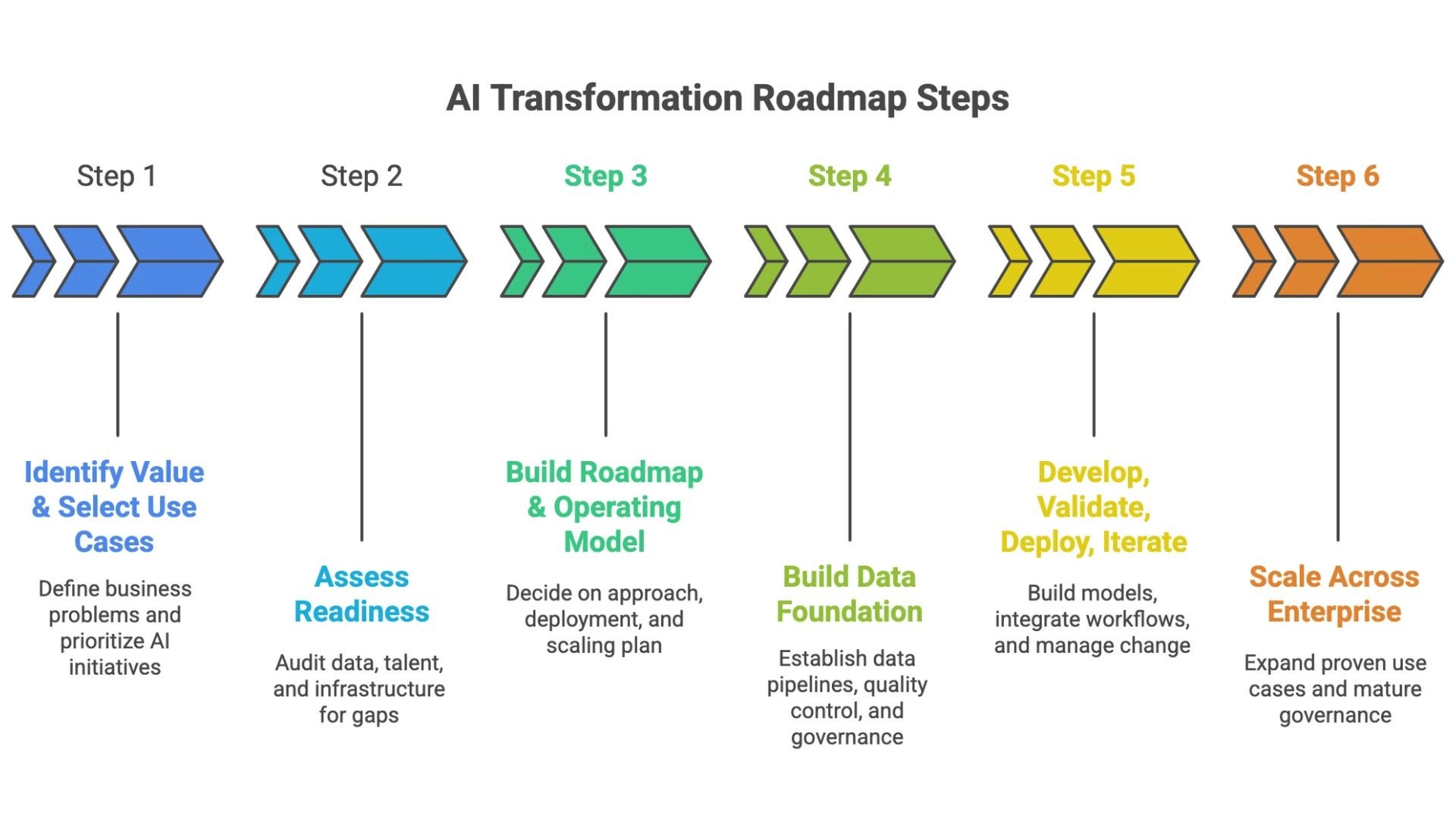

Below is the 6-step AI transformation roadmap we use at Novoslo. It's designed for operationally complex organizations that need to balance quick wins with long-term infrastructure.

Step 1: Identify value and select use cases.

Start with the business problem. Tie every initiative to a specific objective, such as reducing churn, speeding up approvals, or cutting manual data entry. Prioritize by impact and feasibility, and assign a clear owner to each use case.
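To make "prioritize by impact and feasibility" concrete, here is a minimal sketch of a scoring pass. The 1-5 scales, the impact-times-feasibility score, and the rule that unowned use cases are held back are all illustrative assumptions, not a prescribed Novoslo method; the use-case names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class UseCase:
    name: str
    objective: str            # the business metric this use case should move
    impact: int               # hypothetical 1-5 scale: expected business value
    feasibility: int          # hypothetical 1-5 scale: data, skills, integration readiness
    owner: Optional[str] = None


def prioritize(use_cases: List[UseCase]) -> List[Tuple[str, int]]:
    """Rank owned use cases by impact x feasibility, highest score first."""
    for uc in use_cases:
        for score in (uc.impact, uc.feasibility):
            if not 1 <= score <= 5:
                raise ValueError(f"{uc.name}: scores must be between 1 and 5")
    # Assumption: a use case without a named owner is not ready to be ranked.
    owned = [uc for uc in use_cases if uc.owner]
    ranked = sorted(owned, key=lambda uc: uc.impact * uc.feasibility, reverse=True)
    return [(uc.name, uc.impact * uc.feasibility) for uc in ranked]


# Hypothetical candidates mirroring the objectives named above.
candidates = [
    UseCase("Churn early-warning", "reduce churn", impact=5, feasibility=3, owner="Head of CS"),
    UseCase("Invoice data entry", "cut manual data entry", impact=4, feasibility=5, owner="Finance Ops"),
    UseCase("Approval routing", "speed up approvals", impact=4, feasibility=4, owner=None),
]

if __name__ == "__main__":
    for name, score in prioritize(candidates):
        print(name, score)
```

Here "Approval routing" is left out until someone owns it, which reflects the step's point that ownership comes before prioritization.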

Step 2: Assess readiness.

Conduct an honest audit of your current resources. Is the data accessible and clean? Do you have the talent to build or manage AI systems in-house? Identifying gaps early is what prevents stalled pilots three months in.

Step 3: Build the roadmap and operating model.

Decide your approach: buy, build, or partner. Define deployment (cloud vs. on-prem) and map your scaling plan. MIT's research found that companies using purchased AI tools or strategic partnerships succeeded roughly 67% of the time, while internal builds succeeded about a third as often. That's a meaningful variable when structuring your approach.

Step 4: Build the data foundation.

Establish pipelines for data collection, quality control processes, and governance protocols. Gartner's data shows that companies with strong data integration achieve 10.3x ROI from AI initiatives versus 3.7x for those with poor connectivity. The data layer is where the multiplier effect sits.

Step 5: Develop, validate, deploy, and iterate.

Build or configure models. Integrate them into actual workflows, not sandboxes. And invest in change management. This is where organizations learn what MIT identified as the real scaling barrier: can the system learn, adapt, and improve in context?

Step 6: Scale across the enterprise.

Once a use case is proven, expand it across functions. Move from a sales pilot to a marketing rollout. As you scale, mature your governance to handle increased complexity.

For the full deep-dive on each step, read our complete workflow-first guide to implementing AI.

Stages of AI Implementation

The transition from strategy to production is where most organizations encounter the hardest problems. The research suggests three distinct stages, each with specific patterns of success and failure.

Running Pilot Projects That Produce Useful Data

The MIT study surfaced a specific insight about pilot structure: startups reported average timelines of 90 days from pilot to full implementation, while enterprises took significantly longer. The difference wasn't resources; it was scope. Enterprises tended to define pilots broadly, without clear exit criteria.

A pilot designed to produce a decision (continue, modify, or stop) within 60-90 days requires three things before it starts: a specific business metric it's trying to move, a defined timeline, and someone who is accountable for the outcome. Without all three, pilots tend to drift into extended experiments that consume budget without generating the data needed to justify scaling.
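The three prerequisites and the continue/modify/stop decision can be expressed as a simple gate. This is a minimal sketch under stated assumptions: the `PilotCharter` fields, the 90-day cap, and the decision thresholds (hit the target to continue, beat the baseline to modify, otherwise stop) are hypothetical choices for illustration, and it assumes a metric where higher is better.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PilotCharter:
    metric: Optional[str]          # the business metric the pilot must move
    baseline: float                # metric value before the pilot
    target: float                  # hypothetical value that would justify scaling
    duration_days: Optional[int]   # planned pilot length
    owner: Optional[str]           # person accountable for the outcome


def missing_prerequisites(p: PilotCharter) -> List[str]:
    """List which of the three prerequisites are absent before the pilot starts."""
    missing = []
    if not p.metric:
        missing.append("metric")
    # Assumption: a pilot longer than 90 days has no meaningful timebox.
    if p.duration_days is None or not 0 < p.duration_days <= 90:
        missing.append("timeline (at most 90 days)")
    if not p.owner:
        missing.append("owner")
    return missing


def decide(p: PilotCharter, observed: float) -> str:
    """Return 'continue', 'modify', or 'stop' for a higher-is-better metric."""
    gaps = missing_prerequisites(p)
    if gaps:
        raise ValueError(f"pilot is not decision-ready; missing: {', '.join(gaps)}")
    if observed >= p.target:
        return "continue"
    if observed > p.baseline:
        return "modify"
    return "stop"


# Hypothetical pilot: reduce approval cycle time by 20%.
charter = PilotCharter(
    metric="approval cycle time reduction (%)",
    baseline=0.0,
    target=20.0,
    duration_days=75,
    owner="VP Operations",
)
```

For example, `decide(charter, 8.0)` returns `"modify"`: the pilot moved the metric, but not far enough to justify scaling as designed.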

Scaling Proven Use Cases

This is where the real ROI lives, and where most companies plateau. Deloitte's data shows 66% of organizations reporting productivity and efficiency gains from AI, but only 20% achieving revenue growth. The remaining gains are real but modest: some efficiency here, some capacity there.

The difference between modest gains and structural transformation is whether the organization redesigns workflows around AI or simply layers AI onto existing processes. One enterprise client we worked with doubled their sales efficiency by using AI-driven insights to change how the team prioritized, engaged, and followed up with leads. The process itself changed. The AI was the catalyst, but the redesign was the value.

Palo Alto Networks offers a concrete example at scale: their CIO reports that the share of automated IT operations jumped from 12% to 75% over 18 months, halving IT operations costs. The key was selecting initiatives against three criteria (velocity, efficiency, and improved experience), then using AI to fundamentally rethink how those functions worked.

Institutionalizing AI Across the Organization

At some point, AI needs to stop being a project and start being embedded in how the organization operates. This means AI considerations are built into budgeting cycles, governance is part of performance reviews, and the workforce is evaluated partly on its ability to work alongside AI systems.

Deloitte's survey found that the AI skills gap is now the biggest barrier to integration, and education was the primary way companies adjusted their talent strategies. The organizations moving fastest are managing workforce strategy and AI strategy together, not as parallel tracks but as a single integrated plan.

AI and the Future Workforce

The World Economic Forum's workforce research estimates that roughly 1.1 billion jobs could be transformed by technology over the next decade, and 86% of employers expect AI to transform their business by 2030. Their analysis suggests AI will create more jobs than it displaces, but only if companies invest deliberately in people and redesign work rather than layering technology onto old structures.

Rethinking Roles at the Task Level

The practical framework emerging from research is task decomposition. Roles are broken into individual tasks, and each task is evaluated: can AI handle this reliably? Does it require human judgment? Is it a hybrid where AI supports but a human decides?
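A minimal sketch of task decomposition, assuming each task can be tagged with two yes/no attributes. The attributes `rule_based` and `high_stakes`, the three-way rule, and the accounts-payable tasks are hypothetical illustrations; a real assessment would weigh more factors than these two.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Task:
    name: str
    rule_based: bool    # follows clear, repeatable rules
    high_stakes: bool   # errors carry significant financial, legal, or customer impact


def classify(task: Task) -> str:
    """Assign a task to 'ai', 'hybrid', or 'human' using a hypothetical rule."""
    if task.rule_based and not task.high_stakes:
        return "ai"       # AI can handle it reliably
    if task.rule_based:
        return "hybrid"   # AI supports, a human decides
    return "human"        # requires human judgment


def decompose(tasks: List[Task]) -> Dict[str, List[str]]:
    """Group a role's tasks by who should own them."""
    plan: Dict[str, List[str]] = defaultdict(list)
    for task in tasks:
        plan[classify(task)].append(task.name)
    return dict(plan)


# Hypothetical accounts-payable role broken into tasks.
ap_tasks = [
    Task("Extract invoice fields", rule_based=True, high_stakes=False),
    Task("Approve payments over limit", rule_based=True, high_stakes=True),
    Task("Negotiate vendor disputes", rule_based=False, high_stakes=True),
]
```

Running `decompose(ap_tasks)` puts one task in each bucket. The role survives the exercise, but the mix of work inside it changes.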

HCLTech provides a scaled example. Over the past year, nearly 80% of their employees were trained in core skills, with over 115,000 building digital capabilities and more than 116,000 trained in generative AI. The goal was shifting from pure services execution toward solution and IP-led value creation. That's a structural workforce change, not just a training initiative.

What AI Changes About Hiring and Organizational Structure

As AI absorbs routine execution tasks, organizational structures are beginning to flatten. Deloitte's research notes that some companies are merging technology and people-leadership functions to ensure systems and workforce design evolve together. Roles, skills, and career paths are being rebuilt rather than adjusted.

For operations leaders, this means hiring criteria need to account for adaptability and AI literacy alongside domain expertise. IDC projects that by 2026, 40% of all G2000 job roles will involve working with AI agents, fundamentally changing what entry-, mid-, and senior-level positions look like.

Ensuring Ethical and Responsible AI Use

The regulatory environment has moved from theoretical to enforceable. The EU AI Act is now in force, with rules for high-risk AI taking effect in August 2026. The most serious violations can draw fines of up to €35 million or 7% of global annual turnover. In the US, the landscape is fragmented but active, with state-level requirements in California and Texas and NIST's AI Risk Management Framework serving as a voluntary but widely referenced standard.

Governance That Scales

Deloitte's survey found that only one in five companies currently has a mature model for AI governance, even as usage scales rapidly. This creates a growing gap between what organizations are deploying and what they can reliably oversee.

Effective governance at this stage follows a structured approach: catalog every AI application in use across the enterprise, classify each by risk level, define accountability for each system's outputs, and build monitoring into the deployment lifecycle. The "map, measure, monitor" framework (mapping activities, measuring results against objectives, and monitoring quality continuously) keeps governance practical as the number of AI applications grows.
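The catalog-classify-assign-monitor sequence can start as a simple inventory check. This is a minimal sketch: the `AISystem` fields, the three risk tiers, and the gap rules are hypothetical simplifications, and the tiers are not the EU AI Act's legal risk categories.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class AISystem:
    name: str
    customer_facing: bool
    affects_regulated_decisions: bool   # e.g. credit, hiring, or claims decisions
    owner: Optional[str] = None         # who is accountable for the system's outputs
    monitored: bool = False             # is output quality tracked after deployment?


def risk_tier(system: AISystem) -> str:
    """Classify a system into a hypothetical high / medium / low tier."""
    if system.affects_regulated_decisions:
        return "high"
    if system.customer_facing:
        return "medium"
    return "low"


def governance_gaps(inventory: List[AISystem]) -> List[Tuple[str, str]]:
    """Flag systems missing an accountable owner or required monitoring."""
    gaps = []
    for system in inventory:
        tier = risk_tier(system)
        if not system.owner:
            gaps.append((system.name, "no accountable owner"))
        # Assumption: anything above low risk must be monitored in production.
        if tier != "low" and not system.monitored:
            gaps.append((system.name, f"{tier}-risk system without monitoring"))
    return gaps


# Hypothetical inventory.
inventory = [
    AISystem("Resume screener", customer_facing=False, affects_regulated_decisions=True,
             owner="HR Director", monitored=False),
    AISystem("Support chatbot", customer_facing=True, affects_regulated_decisions=False,
             owner=None, monitored=True),
    AISystem("Meeting summarizer", customer_facing=False, affects_regulated_decisions=False,
             owner="IT", monitored=False),
]
```

Re-running a check like this whenever a new system is added is one lightweight way to keep the "monitor" step continuous rather than annual.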

The Business Case for Transparency

Governance is often framed as a compliance cost, but the data increasingly shows it's a competitive advantage. Gartner predicts that by 2026, AI models from organizations that operationalize transparency, trust, and security will see a 50% improvement in adoption, business-goal attainment, and user acceptance. And PwC's survey found that 60% of executives credit responsible AI with boosting ROI and efficiency.

The practical takeaway is that if you're deploying AI in any customer-facing or compliance-sensitive area, documentation, monitoring, explainability, and human oversight aren't optional extras. They're the conditions under which the system earns trust: from regulators, from employees, and from customers.

Future Trends Shaping AI Transformation

Two developments are likely to define the next phase of enterprise AI. Both are already visible in the data.

Agentic AI Moving Into Production

The shift from AI as an assistant to AI as an operator is accelerating. AI agents differ from chatbots in a specific way: they can understand a goal, develop a multi-step plan, take actions across systems, and continue until the outcome is achieved, all under human oversight.

Gartner's projection that 40% of enterprise applications will include task-specific AI agents by the end of 2026 is one marker. Another comes from KPMG's Q1 2025 survey of C-suite leaders: 65% of respondents said they've progressed from early experimentation into fully-fledged AI agent pilot programs, up from 37% the previous quarter.

The organizations best positioned to benefit are those that already have their data foundation, governance framework, and operating model in place. Agents amplify whatever infrastructure already exists: strong foundations lead to strong outcomes, and weak foundations expose existing problems faster.

Industry-Specific Patterns

AI transformation looks different by industry, but the patterns of success and failure are consistent.

In financial services, AI-powered fraud detection systems are processing thousands of data points per transaction. Mastercard improved fraud detection by an average of 20%, and the US Treasury used AI to prevent or recover $4 billion in fraud in fiscal year 2024. Insurance has shown the fastest adoption curve of any regulated sector, moving from 8% full AI adoption in 2024 to 34% in 2025.

In healthcare, investment is high but the failure rate is even higher: over 80% of AI healthcare projects don't deliver on their promises. The core issues are data fragmentation and regulatory complexity, both of which require heavier upfront infrastructure work than most organizations budget for.

In manufacturing and logistics, embodied AI and autonomous systems are entering production environments. Agentic systems are handling loading, sorting, inventory management, and shipment routing with increasing autonomy, particularly in controlled environments where the workflow is well-defined.

The common variable across all sectors is organizational readiness. The technology is broadly available. What separates outcomes is the data quality, governance structure, and change management capacity underneath it.

FAQ

What is the difference between AI adoption and AI transformation?

AI adoption means individual teams or employees are using AI tools, usually within existing workflows. AI transformation is when AI changes the structure of how an organization operates: processes, decision-making, and sometimes business models. Deloitte's 2026 data shows that while most companies are adopting AI, only about a third are using it to deeply transform how their business works. The rest are either redesigning individual processes around AI or capturing surface-level efficiency gains, without yet building lasting structural advantage.

How long does AI transformation take to show ROI?

It depends on scope. Tightly defined use cases can show returns within 3-6 months. Google Cloud's research found that 74% of executives whose organizations deployed AI agents in production achieved ROI within one year. Broader transformational initiatives (the kind that redesign workflows and operating models) typically follow a 2-4 year timeline. The companies seeing the fastest returns tend to start with high-friction, well-defined processes and expand from there, rather than pursuing organization-wide transformation all at once.

What is the biggest barrier to successful AI implementation?

Research consistently points to data readiness. Gartner found that 63% of organizations either lack or aren't sure they have the data management practices required for AI, and predicts that through 2026, organizations will abandon 60% of AI projects not supported by AI-ready data. Beyond data, the MIT study identifies learning as the deeper constraint: most enterprise AI systems don't retain feedback or adapt over time, which limits their ability to compound value. Governance and change management rank as the next most common blockers.

Should we build AI systems in-house or partner with vendors?

MIT's research found that companies purchasing AI tools from specialized vendors or building strategic partnerships succeeded roughly 67% of the time, while purely internal builds succeeded about a third as often. Deloitte's agentic AI research reinforces this: pilots built through partnerships are twice as likely to reach full deployment. Internal builds can work, but they require significantly more time, specialized talent, and infrastructure investment. A hybrid approach (partnering for the platform, customizing for your workflows) tends to balance speed with control.

How do we know if our organization is ready for AI transformation?

Readiness comes down to four factors: data accessibility (is your data clean, integrated, and structured?), governance maturity (do you have accountability and oversight frameworks in place?), talent capacity (can your team build, manage, or at least work alongside AI systems?), and leadership alignment (does executive leadership own the AI strategy?). Cisco's AI Readiness Index found that only 32% of organizations rate their IT infrastructure as fully AI-ready, and just 23% consider their governance processes prepared. A structured AI opportunity assessment can help identify specific gaps and prioritize next steps.
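As a starting point before a formal assessment, the four factors can be turned into a quick self-check. This is a minimal sketch: the 1-5 self-rating scale, the threshold of 3, and the factor keys are hypothetical, and the output only ranks where to look first; it is not a readiness score.

```python
from typing import Dict, List

# The four readiness factors named above, as hypothetical dictionary keys.
READINESS_FACTORS = (
    "data_accessibility",
    "governance_maturity",
    "talent_capacity",
    "leadership_alignment",
)


def readiness_gaps(scores: Dict[str, int], threshold: int = 3) -> List[str]:
    """Return factors rated below the threshold on a 1-5 scale, weakest first."""
    missing = [f for f in READINESS_FACTORS if f not in scores]
    if missing:
        raise ValueError(f"unrated factors: {', '.join(missing)}")
    gaps = [f for f in READINESS_FACTORS if scores[f] < threshold]
    return sorted(gaps, key=lambda f: scores[f])


# Hypothetical self-assessment.
scores = {
    "data_accessibility": 2,
    "governance_maturity": 1,
    "talent_capacity": 3,
    "leadership_alignment": 4,
}
```

With these ratings, `readiness_gaps(scores)` points to governance first and data second, which is the kind of prioritization a structured assessment would then validate.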

The Bottom Line

AI transformation is a multi-year operational change, and the organizations pulling ahead are the ones that treat it that way. The research is clear on what the foundation requires: start with specific, high-friction business problems. Build the data layer before buying tools. Establish governance early. Invest in people and change management alongside technology. And measure outcomes continuously rather than waiting for a single ROI moment.

The 5% of companies that MIT identified as succeeding with AI share a common trait: they treat AI as a way to redesign how work gets done, not as a technology to layer onto existing processes. That's the shift that separates modest efficiency gains from structural competitive advantage.

If you're early in the process and want to understand where AI could deliver the most value across your business, identify AI opportunities with a structured assessment built to give you personalized recommendations and ROI projections based on your specific operations.